It’s a striking headline, and the Guardian’s “New AI fake text generator may be too dangerous to release, say creators” story has gotten plenty of attention over the past couple of weeks.

OpenAI, a nonprofit research company backed by Elon Musk, Reid Hoffman, Sam Altman, and others, says its new AI model, called GPT2, is so good, and the risk of malicious use so high, that it is breaking from its normal practice of releasing the full research to the public. The aim is to allow more time to discuss the ramifications of the technological breakthrough.

There are ample flags to trigger a bullshit detector. Elon Musk’s presence alone justifies some skepticism. And “this new research is just too hot for release” is a solid hype-monger play.

But the claims don’t really represent a massive leap from what’s already being rolled out, particularly for journalism. Bloomberg uses AI to write rapid-fire news summaries, and Reuters is using it to “augment human journalism by identifying trends, key facts and suggesting new stories reporters should write”. The Washington Post has been posting robot-written stories for years.

And the Guardian seems to have done some independent verification of the claims about GPT2:

…in Friday’s print Guardian we ran an article that GPT2 had written itself (it wrote its own made-up quotes; structured its own paragraphs; added its own “facts”) and at present we have not published that piece online, because we couldn’t figure out a way that would nullify the risk of it being taken as real if viewed out of context.

A more detailed rundown of the technology, along with examples of its responses to specific tasks, is available on the OpenAI site. You can start at Better Language Models and Their Implications.

Like so many advances in technology these days, this one is likely to provoke a mix of awe, admiration, fear and contempt. Understandably, discussion of its negative effects has focused on the potential for generating “fake news” and other forms of fraudulent behavior. OpenAI lists several malicious uses, among them:

- Generate misleading news articles

- Impersonate others online

- Automate the production of abusive or faked content to post on social media

- Automate the production of spam/phishing content

And since automated writing is already happening, there is also concern about the future of journalists. And man, there’s no shortage of reasons to worry about that. Besides obviously fake stories, I can’t help but think of the potential to automate and ramp up the hot take industry in politics, sports, entertainment, etc. We will be buried in takes.

But the stated scope of these potential dangers seems almost comically narrow. Haven’t people been paying attention to how we are living in the 21st century?

Whatever you think of it, there are reasons that David Graeber’s essay “On the Phenomenon of Bullshit Jobs: A Work Rant” resonated so strongly that it became a book.

Say what you like about nurses, garbage collectors, or mechanics, it’s obvious that were they to vanish in a puff of smoke, the results would be immediate and catastrophic. A world without teachers or dock-workers would soon be in trouble, and even one without science fiction writers or ska musicians would clearly be a lesser place. It’s not entirely clear how humanity would suffer were all private equity CEOs, lobbyists, PR researchers, actuaries, telemarketers, bailiffs or legal consultants to similarly vanish. (Many suspect it might markedly improve.) Yet apart from a handful of well-touted exceptions (doctors), the rule holds surprisingly well.

When I read about “AI-powered text deepfakes,” my immediate reaction is not “oh my, what will this mean for the future of journalism?” (Though I do worry about that.) Instead, I find myself dwelling on how much of the text I am expected to consume, and in some cases to produce, is indistinguishable from what an algorithm might spew out today, even without these latest AI-secret-sauce advances.

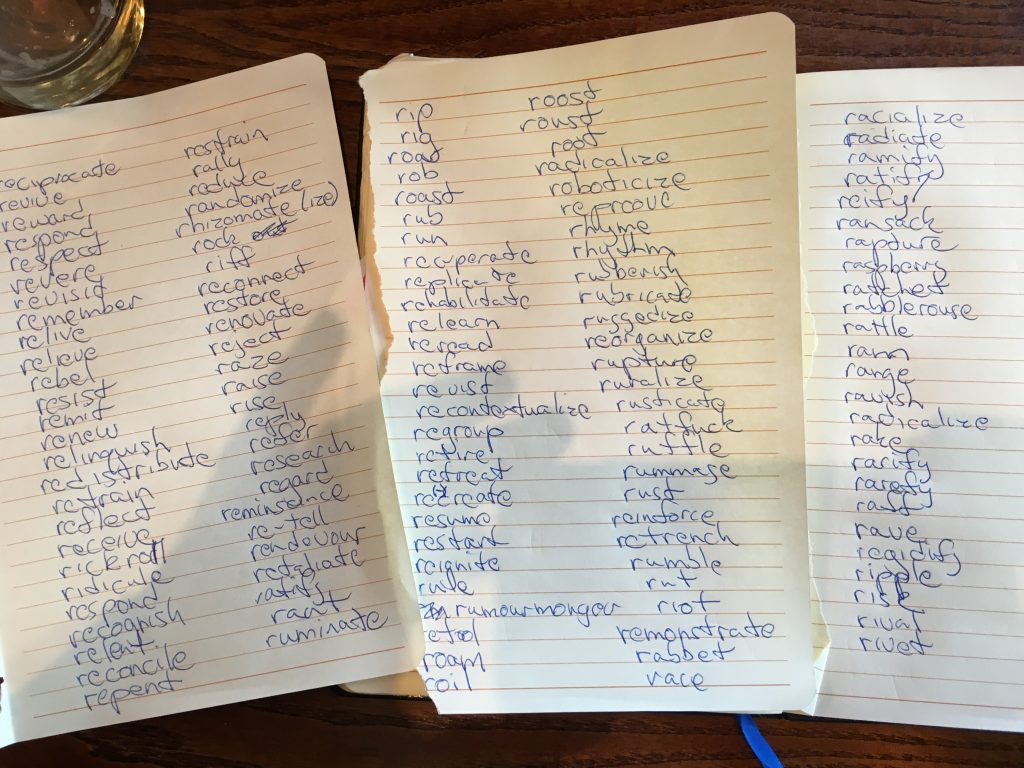

Word processing, databasing, recyclating—recycling, appropriation, intentional plagiarism, identity ciphering, intensive programming, as few are are really what’s constituting the new uh uh, literature.

So I’d love to show you a few examples of what I’m talking about. Um, hmm. Uh, heh. Um um um um um.

This is a uh uh a piece of uh… Huhhhh. This is a piece of uh…writing. Let me see if I can get it uhhhh so you can see it… — Kenneth Goldsmith

How many times are we confronted with writing that seems to have had all of its humanity systematically removed? There are the obvious examples. The unsolicited email sales pitch, followed up by “in case you missed my previous email”, and then by “I hope you will appreciate my persistence”… Well-calibrated to give the impression that the bot on the other end is a struggling human just like you. (Sometimes it gets the treatment.)

More than fake news, I’m thinking about that wide swath of professional communication that is hard to compose. The difficulty is not that it demands individual expression or creativity. It is that those impulses must be checked in favour of navigating norms and domain-specific expectations. I don’t mean to diminish the skill required for things like administrative reports, proposals, and memos. They can be very hard to write, if only because they demand recognition of, and adherence to, professional context. I’ve put a lot of effort into getting better at these sorts of tasks. Yet I can’t help but think that writing tools augmented by this sort of AI will soon be doing them better than I can.

I was going to suggest that this technology might also challenge the hegemony of the essay for research and pedagogy in higher education. But if I’m honest, I expect that academics can more easily imagine the end of higher education than a discourse based on anything but term papers and publications.

The more far-out treatments of AI tend to explore the potential for programmed entities to develop sentience or even consciousness. But the effects play out both ways. In an interview to promote her new book The Age of Surveillance Capitalism, Shoshana Zuboff argues that it “is no longer enough to automate information flows about us; the goal now is to automate us.” And indeed, one of the core tenets of the Dumbularity is that even as machines take on more functions once reserved for humans, humans are being programmed and are behaving as if they were machines.

It’s difficult to imagine these developments leading to a future with more authentic, varied and deeply felt expressions of human experience.

Combine Deep Text™, This Person Does Not Exist (fake portraits of people, generated by a generative adversarial network, https://thispersondoesnotexist.com), and deep fake video (mapping the fake portrait onto a generated video, which could then use very real text-to-speech to speak convincingly). A threat to education? Not likely. But maybe something that might get me out of having to give or participate in vendor webinars. Feed the bot some seed terms and hit “Go”, and voila: auto sales call participant(s), likely interacting with the bots used by vendors. The circle is complete.

Can I deep fake a vendor probe à la Jolly Roger (https://jollyrogertelephone.com/)? If so, where do I send my shitcoin?

“How many times are we confronted with writing that seems to have had all of its humanity systematically removed?” If you read academic writing the answer is almost certainly “Daily.” #zing